Hi, I'm Kevin Wang


I am a PhD student at the University of Texas at Austin, advised by Prof. Atlas Wang at the VITA group.

I develop multi-agent LLM systems and LLM-driven planning frameworks that enable intelligent agent collaboration and strategic reasoning. My research advances AI agent communication, decision-making, and scalable multi-agent coordination.

I am passionate about creating autonomous AI systems that can plan, communicate, and collaborate effectively with humans and other agents, integrating 3D vision capabilities for transformative real-world impact.

Contact me as we explore the possibilities together!

Latest News

July 2025

- 2 papers accepted to COLM 2025: SPIN-Bench and SWIFT.

- Leading the organization of MindGames, a NeurIPS competition on multi-agent LLMs.

- Portfolio redesigned with improved mobile responsiveness and an enhanced user experience.

June 2025

- VideoLifter received the Best Paper Award at the CVPR 2025 AI for Content Creation Workshop.

Publications

* denotes equal contribution

SPIN-Bench: How Well Do LLMs Plan Strategically and Reason Socially?

Authors: Kevin Wang*, Jianzhu Yao*, Ryan Hsieh, Haisu Zhou, Tianqing Zou, Zerui Cheng, Zhangyang Wang, Pramod Viswanath

Venue: COLM

SWIFT: Can Test-Time Scaling Improve World Foundation Model?

Authors: Wenyan Cong, Hanqing Zhu, Peihao Wang, Bangya Liu, Dejia Xu, Kevin Wang, David Z Pan, Yan Wang, Zhiwen Fan, Zhangyang Wang

Venue: COLM

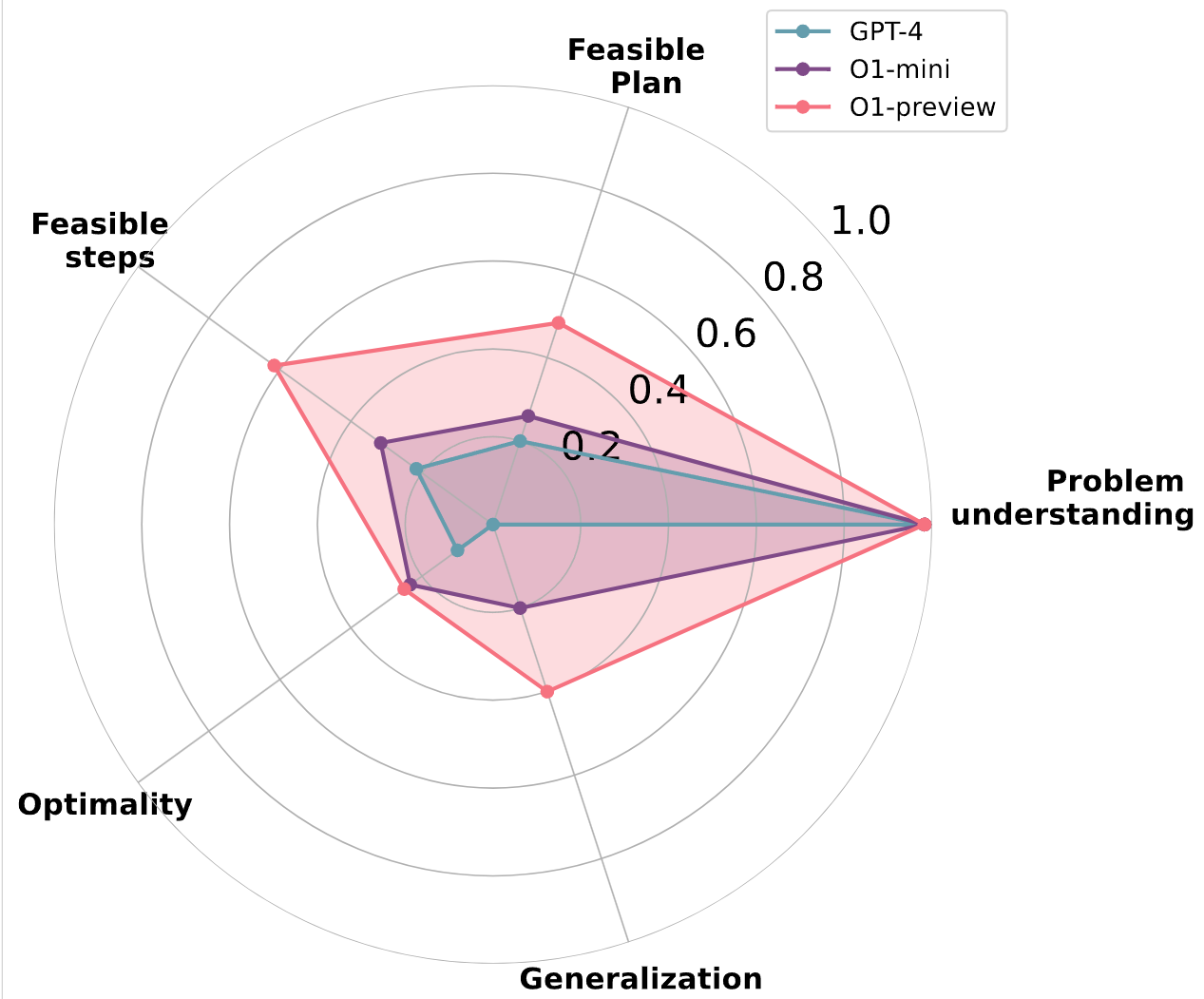

On The Planning Abilities of OpenAI's o1 Models: Feasibility, Optimality, and Generalizability

Authors: Kevin Wang*, Junbo Li*, Neel P Bhatt, Yihan Xi, Qiang Liu, Ufuk Topcu, Zhangyang Wang

Venue: NeurIPS Workshop

Authors: Wenqing Zheng*, S P Sharan*, Zhiwen Fan, Kevin Wang, Yihan Xi, Zhangyang Wang

Venue: PAMI

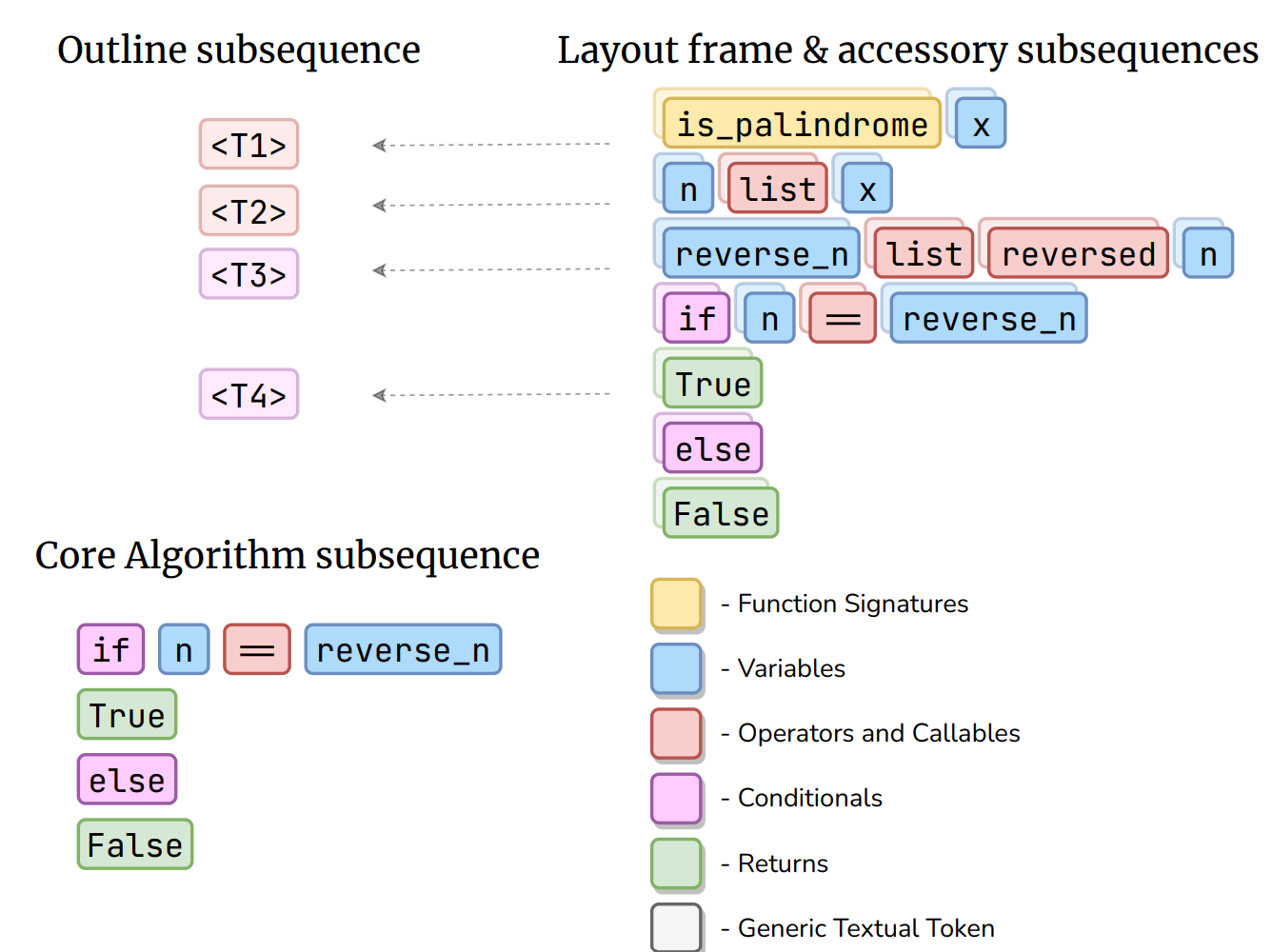

Outline, Then Details: Syntactically Guided Coarse-To-Fine Code Generation

Authors: Wenqing Zheng, S P Sharan, Ajay Jaiswal, Kevin Wang, Yihan Xi, Dejia Xu, Zhangyang Wang

Venue: ICML

Experience

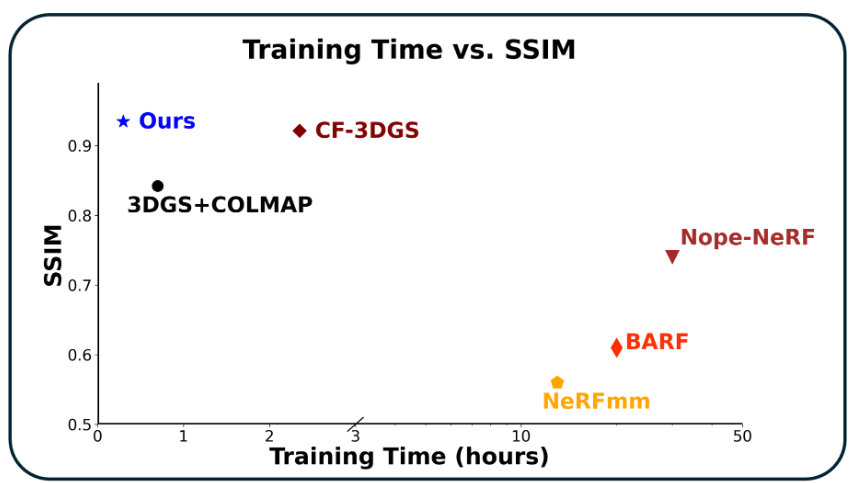

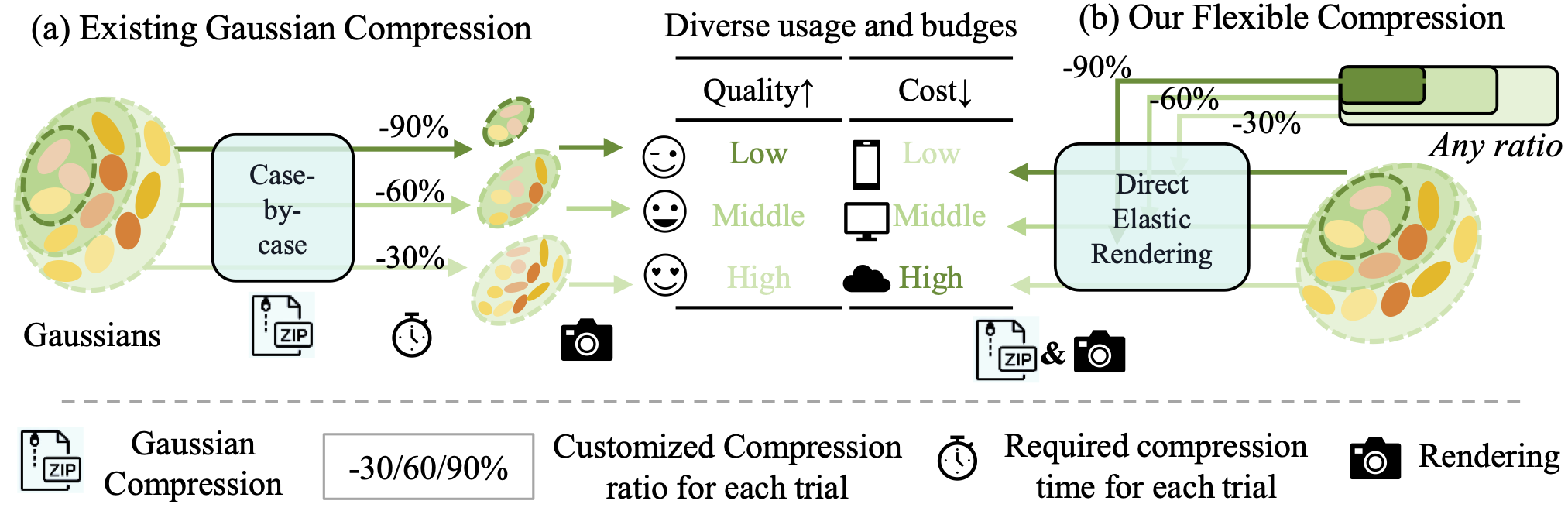

- Proposed and implemented an architecture that compresses 3D reconstruction scenes by 15 times compared with the 3D Gaussian model, using pruning, distillation, and quantization (400+ stars on GitHub; under review at CVPR).

- Implemented a transformer-based language model that generates syntax-error-free Python code and achieves state-of-the-art performance with significantly fewer parameters. This work was published at ICML.
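The compression bullet names generic techniques. As a minimal sketch of two of them (distillation is omitted), the code below prunes low-importance Gaussians and applies uniform 8-bit quantization to one per-Gaussian attribute. The `Gaussian` record, the importance score (opacity times ellipsoid volume), the keep ratio, and all function names are hypothetical choices for illustration, not the project's actual method.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Gaussian:
    # Hypothetical minimal record; real 3D Gaussians also store position,
    # rotation, and color coefficients.
    opacity: float
    scale: tuple[float, float, float]


def importance(g: Gaussian) -> float:
    # Assumed score: opacity weighted by the (unnormalized) ellipsoid volume.
    sx, sy, sz = g.scale
    return g.opacity * sx * sy * sz


def prune(gaussians: list[Gaussian], keep_ratio: float) -> list[Gaussian]:
    # Keep the top `keep_ratio` fraction of Gaussians, ranked by importance.
    k = max(1, round(len(gaussians) * keep_ratio))
    return sorted(gaussians, key=importance, reverse=True)[:k]


def quantize(values: list[float], bits: int = 8) -> tuple[list[int], float, float]:
    # Uniform min-max quantization to integer codes in [0, 2**bits - 1].
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    step = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / step) for v in values]
    return codes, lo, step


def dequantize(codes: list[int], lo: float, step: float) -> list[float]:
    # Map integer codes back to approximate float values.
    return [lo + c * step for c in codes]


if __name__ == "__main__":
    scene = [
        Gaussian(0.9, (1.0, 1.0, 1.0)),
        Gaussian(0.05, (0.1, 0.1, 0.1)),
        Gaussian(0.5, (0.5, 0.5, 0.5)),
        Gaussian(0.8, (0.2, 0.2, 0.2)),
    ]
    kept = prune(scene, keep_ratio=0.5)
    codes, lo, step = quantize([g.opacity for g in kept])
    print(len(kept), codes)
```

Storing one byte per attribute instead of a 32-bit float is what makes quantization attractive here; the pruning step shrinks the number of Gaussians before that per-attribute saving is applied.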

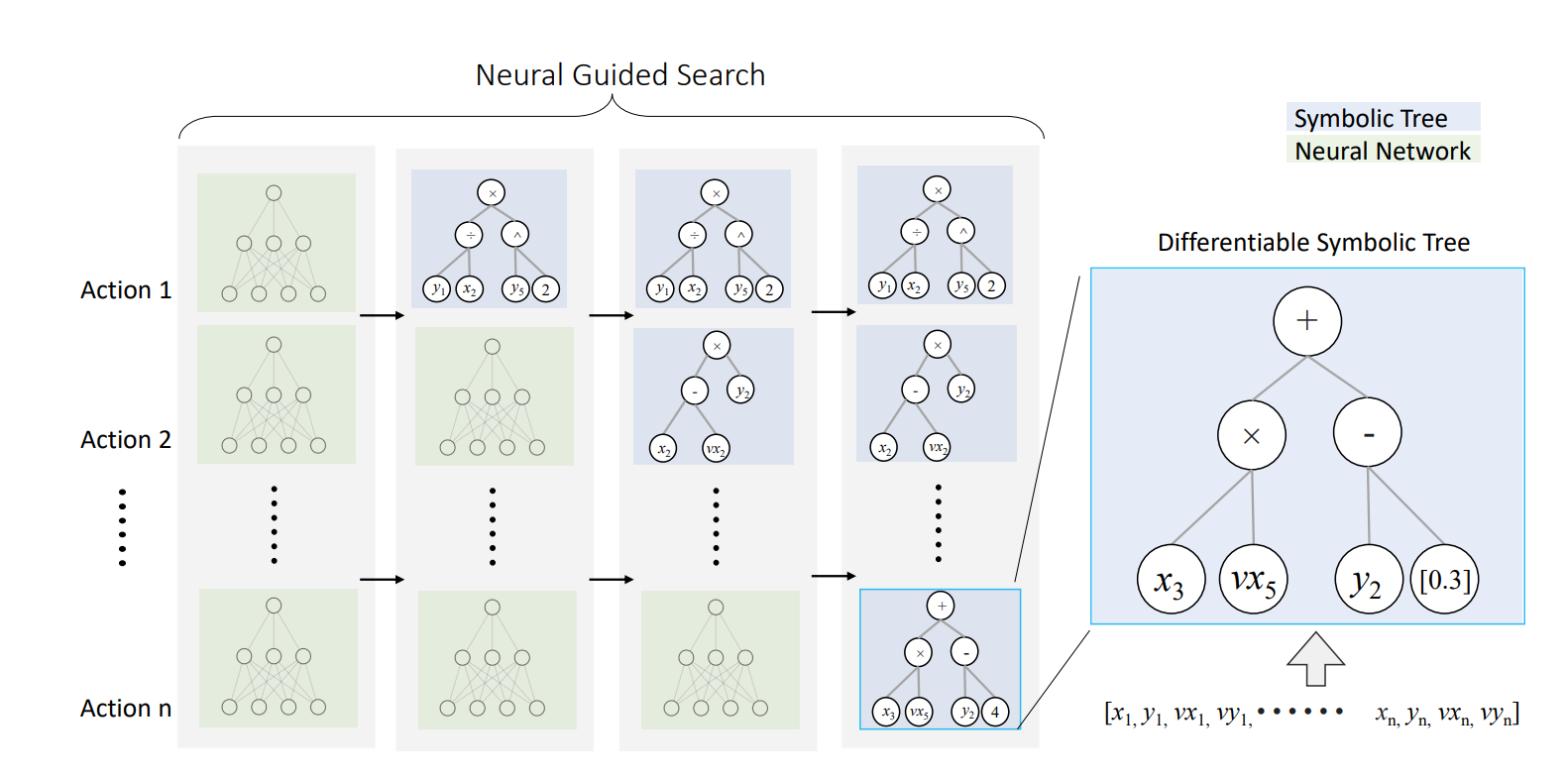

- Built an algorithm for extracting symbolic representations from visual RL (paper under minor revision at TPAMI). Conceived and implemented a partially differentiable, neural-guided search algorithm that improves the scalability of symbolic RL.

- Tools: PyTorch, OpenCV

- Enhanced SparkCognition malware detection with dual-layer classification models: static models for targeted file types, and a dynamic model that catches samples misclassified by the static models.

- Static models: Conducted feature-contribution analysis on the existing static ML models, pruning noisy features and empirically adding novel ones, which reduced runtime by 40%. Leveraged Kubernetes for efficient builds and distributed training, reducing static-model training time by 30%.

- Dynamic model: Developed a novel dynamic ML model in Python using LightGBM and translated it to C# production code, achieving 95% precision in detecting unseen malware.

- Tools: Python, C#, .NET, Kubernetes
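The dual-layer design above can be sketched as a simple routing rule. This minimal sketch assumes that the dynamic model re-examines every sample the static layer does not flag (the actual routing criterion is not stated here). The `DualLayerDetector` class, the feature names, the toy model callables, and the 0.5 threshold are hypothetical placeholders, not SparkCognition's code.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical model type: any callable mapping a feature dict to a
# malware probability in [0, 1]. Real systems would wrap trained classifiers.
Model = Callable[[dict], float]


@dataclass
class DualLayerDetector:
    static_models: dict[str, Model]  # one static model per targeted file type
    dynamic_model: Model             # second layer, scores runtime-behavior features
    threshold: float = 0.5

    def classify(self, sample: dict) -> bool:
        """Return True if the sample is flagged as malware."""
        static = self.static_models.get(sample["file_type"])
        if static is not None and static(sample["static_features"]) >= self.threshold:
            # The static layer already flags it; skip the costlier dynamic pass.
            return True
        # Assumed routing: samples the static layer passes, or cannot score
        # because their file type is untargeted, go to the dynamic model,
        # which can catch static false negatives.
        return self.dynamic_model(sample["dynamic_features"]) >= self.threshold


if __name__ == "__main__":
    detector = DualLayerDetector(
        # Toy stand-ins: scaled entropy for PE files, one behavioral flag.
        static_models={"pe": lambda f: f.get("entropy", 0.0) / 8.0},
        dynamic_model=lambda f: 1.0 if f.get("writes_autorun_key") else 0.0,
    )
    sample = {
        "file_type": "pe",
        "static_features": {"entropy": 2.0},
        "dynamic_features": {"writes_autorun_key": True},
    }
    print(detector.classify(sample))
```

Running the static models first keeps the common case cheap, while the dynamic layer acts as a safety net for samples the static layer misses.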

Education

Austin, USA

Degree: Ph.D. candidate in Electrical and Computer Engineering, advised by Prof. Atlas Wang

Austin, USA

Degree: Bachelor of Science in Computer Science and Bachelor of Science in Mathematics

GPA: 3.78/4.0

Relevant Coursework:

- Computer Vision

- Machine Learning

- Reinforcement Learning